Camera Selection for Microscopy

By Mike Fussell, Marketing Team

Last updated on May 24, 2024

Camera selection can have a big impact on the capabilities of your microscope system. Selecting a camera that matches your requirements will ensure you have the performance you need to capture good data quickly without paying more for capabilities that are not required. The key to selecting the most appropriate camera for your application is understanding and balancing tradeoffs between performance specifications. This article introduces the most important camera specs and how they can be used to guide the camera selection process.

| Property | Why it Matters | What to look for |
|---|---|---|
| Quantum Efficiency | Better light gathering efficiency improves detection of weak signals and helps increase throughput by capturing bright images with faster exposures. | Higher is better |
| Read Noise | Clearer images with less noise improve detection of weak signals. | Lower is better |
| Temporal Dark Current | Lowers the noise floor on long-exposure images for improved detection of low intensity signals. | Lower is better |
| Absolute Sensitivity Threshold | The lowest intensity signal which can be reliably detected above the noise floor. Useful for evaluating trade-offs between sources of image noise and light gathering efficiency. | Lower is better |
| Pixel Size | Larger pixels capture more light at the expense of resolution. Pixels smaller than the diffraction limit will not improve image quality. | Application Dependent |
| Sensor Size | Larger sensors enable a larger field of view for faster scanning of large areas. A sensor much larger than the field number wastes sensor area. | Application Dependent |

Table 1: Summarized camera properties and why they matter.

Color vs Monochrome

Color camera pixels are restricted to red, green or blue wavelengths, while monochrome camera pixels gather light from their full range of wavelengths at every pixel. This enables monochrome cameras to make better use of available light, resulting in superior low-light imaging capabilities.

Since color cameras only gather red, green or blue light at each pixel, reconstructing an image with all three wavelengths for every pixel requires processing to infer the missing values based on the values of adjacent pixels. This interpolation process results in a loss of effective spatial resolution. Interpolation is not required for monochrome cameras, resulting in greater spatial resolution.

For fluorescence microscopy, monochrome cameras are strongly recommended as they will deliver better spatial resolution and have greater light gathering efficiency over a wider range of wavelengths than color cameras.

Pixel size, resolution and sensor size

Pixel size, sensor resolution, and sensor size are traded off against each other. For a given sensor area, a sensor with larger pixels will capture more light per pixel but will output lower resolution images. A higher resolution sensor may capture more detail, but its smaller pixels will not collect as much light.

Select your camera to match your optics. A camera with a sensor that is larger than your field number will result in wasted area on the sensor. A camera with a sensor that is much smaller than your field number will restrict your field of view, increasing your scanning time for a given sample area. Increasing image sensor resolution at the expense of smaller pixel sizes will cease to deliver an increase in spatial resolution once the pixel size shrinks below the resolution of your optics.
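The matching rule above can be checked with a little arithmetic. The sketch below is a rough illustration rather than a vendor formula: it assumes the Rayleigh criterion (0.61 λ / NA) for optical resolution, a Nyquist target of two pixels per resolved distance, and a 1× camera adapter. The function names and the example objective (20×, NA 0.75) are hypothetical; the sensor figures are taken from Table 2.

```python
import math


def rayleigh_resolution_um(wavelength_nm, numerical_aperture):
    """Smallest resolvable distance at the sample, in micrometres (Rayleigh criterion)."""
    return 0.61 * (wavelength_nm / 1000.0) / numerical_aperture


def largest_useful_pixel_um(wavelength_nm, numerical_aperture, magnification):
    """Largest pixel that still places two pixels across one resolved distance.

    Assumes a 1x camera adapter, so the image on the sensor is magnified
    only by the objective.
    """
    projected_um = rayleigh_resolution_um(wavelength_nm, numerical_aperture) * magnification
    return projected_um / 2.0


def sensor_diagonal_mm(width_px, height_px, pixel_um):
    """Sensor diagonal in millimetres, for comparison against the field number."""
    return math.hypot(width_px, height_px) * pixel_um / 1000.0


def sample_field_of_view_mm(width_px, height_px, pixel_um, magnification):
    """Width and height of the sample area imaged in a single frame, in millimetres."""
    scale = pixel_um / 1000.0 / magnification
    return width_px * scale, height_px * scale


if __name__ == "__main__":
    # Hypothetical optics: 20x objective, NA 0.75, 525 nm emission.
    pixel_limit = largest_useful_pixel_um(525, 0.75, 20)
    print(f"Largest useful pixel size: {pixel_limit:.2f} um")

    # Sensor figures from Table 2 (2960 x 2960 pixels, 4.25 um pitch).
    print(f"Sensor diagonal: {sensor_diagonal_mm(2960, 2960, 4.25):.1f} mm")

    width, height = sample_field_of_view_mm(1920, 1200, 5.86, 20)
    print(f"Field of view per frame: {width:.3f} mm x {height:.3f} mm")
```

Under these assumed optics, pixels much smaller than roughly 4.3 µm would add resolution on paper without adding detail to the image. Some practitioners sample more finely than two pixels per resolved distance, so treat the factor of two as a starting point.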

On-camera pixel binning is a useful capability which combines groups of adjacent pixels into a single large virtual pixel. While this decreases the resolution of the captured image, it enables higher frame rates and a lower absolute sensitivity threshold. The increased speed and sensitivity are well suited to investigating phenomena like blood flow and intracellular Ca²⁺ dynamics. On-sensor binning is more effective at reducing noise than binning carried out in the camera's image processing system.
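To make the arithmetic of binning concrete, here is a minimal software sketch that sums each block of adjacent pixels into one virtual pixel. It only illustrates the resolution trade-off; it is not how any particular camera implements binning, and the function name `bin_pixels` is hypothetical. Because it runs after readout, it corresponds to the less effective processing-side case described above, not to on-sensor binning.

```python
def bin_pixels(image, factor=2):
    """Sum each factor x factor block of pixels into a single output pixel.

    `image` is a list of equal-length rows of pixel values. Rows and columns
    that do not fill a complete block are discarded.
    """
    rows = len(image) - len(image) % factor
    cols = len(image[0]) - len(image[0]) % factor
    return [
        [
            sum(
                image[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            )
            for c in range(0, cols, factor)
        ]
        for r in range(0, rows, factor)
    ]


if __name__ == "__main__":
    frame = [
        [0, 1, 2, 3],
        [4, 5, 6, 7],
        [8, 9, 10, 11],
        [12, 13, 14, 15],
    ]
    # A 4 x 4 frame becomes a 2 x 2 frame; each output value is the sum of 4 inputs.
    print(bin_pixels(frame, factor=2))
```

With 2 × 2 binning the output has a quarter of the pixels, and each virtual pixel carries the combined signal of four physical ones.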

Sensitivity and read noise

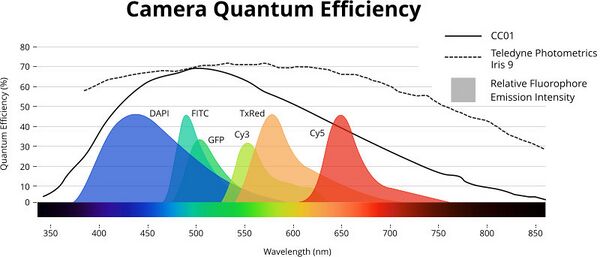

Quantum efficiency (QE) is a measure of how efficiently an image sensor can convert incoming photons into an output signal. It is widely used as a measure of sensitivity. Quantum efficiency is wavelength dependent, and peaks around 525 nm for CMOS and CCD cameras with silicon sensors. Innovations in pixel design and sensor manufacturing techniques have yielded improvements in overall light gathering efficiency of newer sensors, and extended their useful wavelength range further into the near-infrared. Higher-end cameras from manufacturers including Photometrics, Hamamatsu and PCO typically have a higher peak QE and maintain a higher QE across a wider wavelength range (Fig 1) than the Zaber CC01. Selecting a camera with a high QE for the emission wavelengths of your fluorophores will yield a higher intensity signal and enable shorter exposure times.

Read noise is a measure of how accurately the charges on a sensor are converted into a digital signal. Many "scientific grade" CMOS (sCMOS) cameras will have a significantly lower read noise than the Zaber CC01. This translates into more reliable detection of lower intensity signals above the noise floor of the image. The weakest signal that can be reliably distinguished from the image noise is the Absolute Sensitivity Threshold (AST). The lower a camera's AST is, the weaker the signal it can reliably detect.
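The interaction between QE and read noise can be sketched numerically. The example below assumes a simplified noise model (photon shot noise plus read noise, with dark current and quantization noise ignored) and uses the AST approximation from the EMVA 1288 standard, with read noise standing in for the temporal dark noise term. The function names are hypothetical, and the inputs are the peak QE and read noise figures from Table 2, so the results are only indicative.

```python
import math


def snr(photons, quantum_efficiency, read_noise_e):
    """Signal-to-noise ratio for one pixel under a shot-noise plus read-noise model."""
    signal_e = quantum_efficiency * photons
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2)


def absolute_sensitivity_threshold(quantum_efficiency, read_noise_e):
    """Approximate number of photons at which the SNR equals 1.

    Follows the EMVA 1288 approximation, treating read noise as the only
    temporal dark noise source (an assumption made for this sketch).
    """
    return (math.sqrt(read_noise_e ** 2 + 0.25) + 0.5) / quantum_efficiency


if __name__ == "__main__":
    cameras = {
        "CC01": {"qe": 0.70, "read_noise": 6.97},
        # Table 2 lists peak QE as "> 73%", so 0.73 is a conservative value.
        "Iris 9": {"qe": 0.73, "read_noise": 1.5},
    }
    for name, spec in cameras.items():
        ast = absolute_sensitivity_threshold(spec["qe"], spec["read_noise"])
        weak = snr(50, spec["qe"], spec["read_noise"])
        print(f"{name}: AST ~ {ast:.1f} photons, SNR at 50 photons ~ {weak:.1f}")
```

Because the QE values in Table 2 are so close, nearly all of the difference in this model comes from read noise, which is why it dominates for dim samples.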

Over long exposures, CMOS image sensors will slowly accumulate random Temporal Dark Noise (TDN). Image stacking techniques developed by deep-space astronomers overcome this phenomenon by leveraging the low read noise of CMOS sensors. Capturing several shorter exposures and averaging them suppresses the random TDN, which falls roughly with the square root of the number of frames averaged, while the desired signal is preserved.
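The effect of stacking can be illustrated with a small simulation. This is a sketch under simplifying assumptions: the noise on a single pixel is modelled as independent Gaussian noise with a fixed standard deviation, which ignores shot noise and fixed-pattern effects. The function names and the signal and noise values are hypothetical.

```python
import random
import statistics


def averaged_pixel(signal, noise_sigma, n_frames, rng):
    """Average one pixel over n_frames exposures, each with independent Gaussian noise."""
    return statistics.fmean(rng.gauss(signal, noise_sigma) for _ in range(n_frames))


def measured_noise(signal, noise_sigma, n_frames, trials=2000, seed=0):
    """Standard deviation of the averaged pixel value across many repeated stacks."""
    rng = random.Random(seed)
    samples = [averaged_pixel(signal, noise_sigma, n_frames, rng) for _ in range(trials)]
    return statistics.pstdev(samples)


if __name__ == "__main__":
    # Hypothetical pixel: 100 e- of signal with 5 e- of random noise per frame.
    for n_frames in (1, 4, 16):
        noise = measured_noise(100.0, 5.0, n_frames)
        print(f"{n_frames:2d} frames averaged: noise ~ {noise:.2f} e-")
```

In this model, averaging 16 frames cuts the random noise to about a quarter of its single-frame value, while the mean signal stays the same.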

| Property | CC01 | Photometrics Iris 9 |
|---|---|---|
| Resolution | 2.3 MP (1920 × 1200) | 9 MP (2960 × 2960) |
| Interface | USB 3.1 | USB 3.1 Gen 1 |
| Frame Rate* | 41 FPS | 30 FPS |
| Pixel Size | 5.86 µm | 4.25 µm |
| Sensor Size | 13.4 mm diagonal | 17.8 mm diagonal |
| Read Noise | 6.97 e⁻ | 1.5 e⁻ |
| Peak Quantum Efficiency (QE) | 70% | > 73% |
| Camera lens mount | C-Mount | C-Mount |
| Advantages | Higher frame rate; shorter image processing time; larger pixels; lower cost; more onboard IO functions | Higher resolution; higher QE over a wider range of wavelengths; lower read noise; lower absolute sensitivity threshold; larger field of view |

Table 2: Zaber CC01 and typical sCMOS cameras compared.

* Full frame acquisition at minimum exposure time

Cooled Cameras

To reduce image noise as much as possible and achieve the lowest possible absolute sensitivity threshold, many research-oriented cameras use cooled image sensors. Cooled CMOS and EMCCD cameras can be much larger than uncooled cameras. In its default configuration, the Nucleus MVR inverted microscope supports C-Mount cameras with front dimensions up to 60 mm × 60 mm. For larger cameras, or super-resolution modules, the camera mount can pivot sideways, enabling cameras of any size to be mounted.

What is the difference between sCMOS, CMOS and CCD?

While there is no formal definition of what makes an sCMOS camera, this class of cameras has historically referred to CMOS cameras optimized for high sensitivity and low read noise. Many cameras marketed as sCMOS are cooled to lower the noise floor and improve their AST. Early generations of CMOS image sensors lagged behind the more mature CCD technology in terms of low-light and long exposure performance. As CMOS technology improved and manufacturers began targeting research markets, the sCMOS term was adopted to help underscore the greatly improved performance of newer CMOS image sensors. While the term still persists, the current generation of CMOS sensors delivers vastly superior performance across a wide range of performance metrics compared to CCD sensors. Outside a few niche applications, CMOS sensors have displaced CCD sensors and many sensor manufacturers have ceased producing new CCDs.